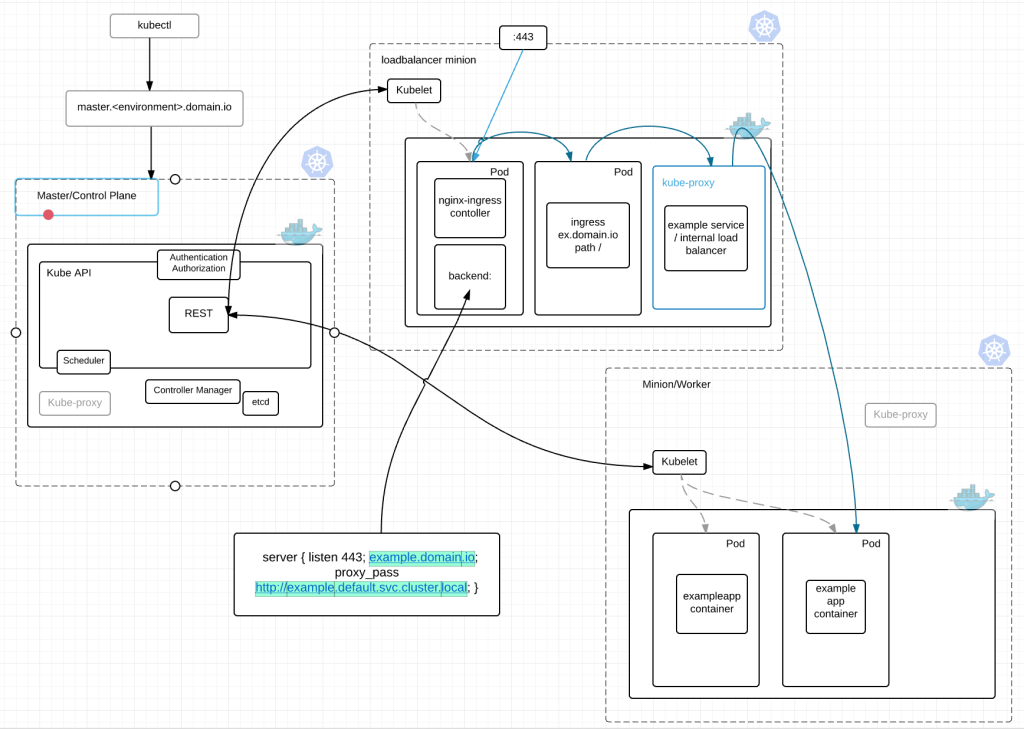

There are two different types of load balancing in Kubernetes. I’m going to label them internal and external.

Internal – aka “service” – is load balancing across containers of the same type, selected by label. A service generally exposes an internal cluster IP and port(s) that each pod can reference through environment variables.

Ex. Three instances of the same application are running across multiple nodes in a cluster. A service can load balance between these containers behind a single endpoint, allowing for container failures and even node failures within the cluster while preserving accessibility of the application.
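As a rough sketch of what such an internal service could look like, here is a minimal manifest. The name `example`, the `app: example` label and the container port 8443 are assumptions made for illustration, not values from a real deployment.

```yaml
# Minimal internal (cluster IP) service; names, label and ports are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: example
spec:
  selector:
    app: example        # assumed label carried by the three application pods
  ports:
  - name: https
    port: 443           # port exposed on the service's cluster IP
    targetPort: 8443    # assumed port the application container listens on
```

Pods started after this service exists should see it as `EXAMPLE_SERVICE_HOST` and `EXAMPLE_SERVICE_PORT` in their environment.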

External – Services can also act as external load balancers, if you wish, through the NodePort or LoadBalancer type.

NodePort exposes a high-numbered port externally on every node in the cluster, by default somewhere in the 30000-32767 range. When scaling this up to 100 or more nodes, it becomes less than stellar. It’s also not great because who hits an application over high-numbered ports like this? So now you need another external load balancer to do the port translation for you. Not optimal.
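For reference, a NodePort service might be declared roughly like this. The service name, label, target port and the specific `nodePort` value are assumptions picked for the example.

```yaml
# Sketch of a NodePort service; names, label and port numbers are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: example-nodeport
spec:
  type: NodePort
  selector:
    app: example        # assumed pod label
  ports:
  - port: 443           # port on the internal cluster IP
    targetPort: 8443    # assumed container port
    nodePort: 30443     # opened on every node; must sit inside the node port range
```

Leaving out `nodePort` lets Kubernetes pick a free port from the range instead.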

LoadBalancer helps with this somewhat by creating an external load balancer for you if you are running Kubernetes in GCE, AWS or another supported cloud provider. The pods get exposed on a high-range external port and the load balancer routes directly to the pods. This bypasses the concept of a service in Kubernetes, still requires high-range ports to be exposed, allows for no segregation of duties, requires all nodes in the cluster to be externally routable (at minimum) and will cause real issues if you have more applications to expose than there are ports in the range reserved for this task (2,768 with the default 30000-32767 range).
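A LoadBalancer service differs from the NodePort variant mainly in its `type`. The sketch below assumes a supported cloud provider and uses a hypothetical name, label and container port.

```yaml
# Sketch of a LoadBalancer service; names, label and ports are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: example-lb
spec:
  type: LoadBalancer    # asks the cloud provider to provision an external load balancer
  selector:
    app: example        # assumed pod label
  ports:
  - port: 443           # port served by the external load balancer
    targetPort: 8443    # assumed container port
```

Once the provider has provisioned the load balancer, its external address should appear in the service's status.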

Because services were not the long-term answer for external routing, some contributors came out with Ingress and Ingress Controllers. This, in my mind, is the future of external load balancing in Kubernetes. It removes most, if not all, of the issues with NodePort and LoadBalancer, is quite scalable and utilizes some technologies we already know and love like HAProxy, Nginx or Vulcan. So let’s take a high-level look at what this thing does.

Ingress – Collection of rules to reach cluster services.

Ingress Controller – An HAProxy, Vulcan or Nginx pod that listens to the /ingresses endpoint to update itself and acts as a load balancer for Ingresses. It also listens on its assigned port for external requests.

In the diagram above we have an Ingress Controller, consisting of an nginx pod, listening on :443. This pod watches the Kubernetes master for newly created Ingresses. It then parses each Ingress and creates a backend for it in nginx. Nginx –> Ingress –> Service –> application pod.

With this combination we get the benefits of a full-fledged load balancer that listens on normal ports for traffic and is fully automated.

Creating a new Ingress is quite simple. You’ll notice this is a beta extension. It will be GA pretty soon.

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: example-ingress
    spec:
      rules:
      - host: ex.domain.io
        http:
          paths:
          - path: /
            backend:
              serviceName: example
              servicePort: 443

Creating the Ingress Controller is also quite easy.

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx-ingress
      labels:
        app: nginx-ingress
    spec:
      replicas: 1
      selector:
        app: nginx-ingress
      template:
        metadata:
          labels:
            app: nginx-ingress
        spec:
          containers:
          - image: gcr.io/google_containers/nginx-ingress:0.1
            imagePullPolicy: Always
            name: nginx
            ports:
            - containerPort: 80
              hostPort: 80

Here is an ingress controller for nginx. I would use this as a template from which to create your own. The default pod is in its infancy and doesn’t handle multiple backends very well. It’s written in Go, but you could quite easily write this in whatever language you want. It’s a pretty simple little program.

For more information, here is the link to Ingress Controllers at the Kubernetes project.

Hi Michael,

Would love to chat if you would be interested in guest blogging for cloud native apps @vmware.

I’ll be in touch. Thanks

Hello ~ Awesome content ~ Thank You

The provided links appear to be broken: “Here is …” and “… link to Ingress Controllers at …”

Thanks friend. I’ve updated the link

Sorry for the rookie question, but is it possible to define rules for a service to customize load balancing among pods? For example, define a rule for a service with 2 pods which makes 30% of the requests go to pod number 1 and 70% to pod number 2.

Mohsen,

Not at the moment. I would be interested to understand the use case. There are a number of possible scenarios which could accomplish this.

You could create a Kubernetes Headless service which would provide a list of IPs for the pods behind the service. Then use something like Kong (API gateway) to create weighted routing across the pods.
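A headless service of the kind mentioned here could be sketched as follows. The name, label and port are assumptions for illustration, and the weighted routing itself would still have to be configured in the gateway.

```yaml
# Sketch of a headless service; name, label and port are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: example-headless
spec:
  clusterIP: None       # headless: no virtual IP, DNS returns the pod IPs directly
  selector:
    app: example        # assumed pod label
  ports:
  - port: 8443          # assumed container port
```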

Regards