In the beginning, there was logging ……… AND there were single homed, single server applications, engineers to rummage through server logs, CDs for installing OSs and backup tape drives. Fortunately most everything else has gone the way of the dodo. Unfortunately, logging in large part has not.

When we started our PaaS project, we recognized logging was going to be of interest in a globally distributed, containerized, volatile, ever changing environment. CISO, QA and various business units all have data requirements that can be gathered from logs. All having different use cases and all wanting log data they can’t seem to aggregate together due to the distributed nature of our organization. Now some might think, we’ve done that. We use Splunk or ELK and pump all the logs into it and KA-CHOW!!! were done. Buutttt its not quite that simple. We have a crap ton of applications, tens of thousands of servers, tons of appliances, gear and stuff all over the globe. We have one application that literally uses 1 entire ELK stack by itself because the amount of data its pumping out is so ridiculous.

So with project Bitesize coming along nicely, we decided to take our first baby step into this realm. This is a work in progress but here is the gist. Dynamically configured topics through fluentd containers running in Kubernetes on each server host. A scalable Kafka cluster that holds data for a limited amount of time. Saves data off to permanent storage for long-term/bulk analytics. A Rest API or http interface. A management tool for security of the endpoint.

Where we’re at today is dynamically pushing data into Kafka via Fluentd based on Kubernetes namespace. So what does that mean exactly? EACH of our application stacks (by namespace) can get their own logs for their own applications without seeing everything else.

I’d like to give mad props to Yiwei Chen for making this happen. Great work mate. His image can be found on Docker hub at ywchenbu/fluentd:0.8.

This image contains just a few key fluentd plugins.

fluentd-kubernetes-metadata-filter

fluentd-record-transformer – built into fluentd. No required install.

We are still experimenting with this so expect it to change but it works quite nicely and could be modified for use cases other than topics by namespace.

You should have the following directory in place on each server in your cluster.

Directory – /var/log/pos # So fluentd can keep track of its log position

Here is td-agent.yaml.

apiVersion: v1

kind: Pod

metadata:

name: td-agent

namespace: kube-system

spec:

volumes:

- name: log

hostPath:

path: /var/log/containers

- name: dlog

hostPath:

path: /var/lib/docker/containers

- name: mntlog

hostPath:

path: /mnt/docker/containers

- name: config

hostPath:

path: /etc/td-agent

- name: varrun

hostPath:

path: /var/run/docker.sock

- name: pos

hostPath:

path: /var/log/pos

containers:

- name: td-agent

image: ywchenbu/fluentd:0.8

imagePullPolicy: Always

securityContext:

privileged: true

volumeMounts:

- mountPath: /var/log/containers

name: log

readOnly: true

- mountPath: /var/lib/docker/containers

name: dlog

readOnly: true

- mountPath: /mnt/docker/containers

name: mntlog

readOnly: true

- mountPath: /etc/td-agent

name: config

readOnly: true

- mountPath: /var/run/docker.sock

name: varrun

readOnly: true

- mountPath: /var/log/pos

name: pos

You will probably notice something thing about this config that we don’t like. The fact that its running in privileged mode. We intend to change this in near future but currently fluentd cant read the log files without it. Not a difficult change, just haven’t made it yet.

This yaml gets placed in

/etc/kubernetes/manifests/td-agent.yaml

Kubernetes should automatically pick this up and deploy td-agent.

And here is where the magic happens. Below is td-agent.conf. Which according to our yaml should be located at

/etc/td-agent/td-agent.conf

<source>

type tail

path /var/log/containers/*.log

pos_file /var/log/pos/es-containers.log.pos

time_format %Y-%m-%dT%H:%M:%S.%NZ

tag kubernetes.*

format json

read_from_head true

</source>

<filter kubernetes.**>

type kubernetes_metadata

</filter>

<filter **>

@type record_transformer

enable_ruby

<record>

topic ${kubernetes["namespace_name"]}

</record>

</filter>

<match **>

@type kafka

zookeeper SOME_IP1:2181,SOME_IP2:2181 # Set brokers via Zookeeper

default_topic default

output_data_type json

output_include_tag false

output_include_time false

max_send_retries 3

required_acks 0

ack_timeout_ms 1500

</match>

What’s happening here?

- Fluentd is looking for all log files in /var/log/containers/*.log

- Our kubernetes-metadata-filter is adding info to the log file with pod_id, pod_name, namespace, container_name and labels.

- We are transforming the data to use the namespace as the kafka topic

- And finally pushing the log entry to Kafka.

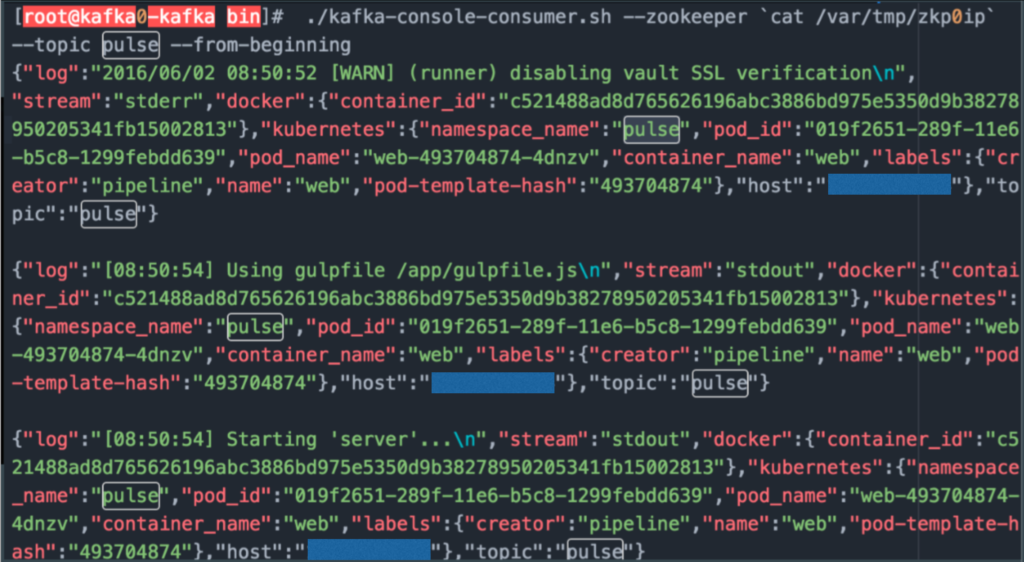

Here is an example of a log file you can expect to get from Kafka. All in json.

Alright so now that we have data being pushed to Kafka topic by namespace what can we do with it?

Next we’ll work on getting data out of Kafka.

Securing the Kafka endpoint so it can be consumed from anywhere.

And generally rounding out the implementation.

Eventually we hope Kafka will become an endpoint by which logs from across the organization can be consume. But naturally, we are starting bitesized.

Please follow me and retweet if you like what you see. Much appreciated.

@devoperandi

Hello,

One quick question : what’s the point of sending the logs to Kafka instead of Elasticsearch ? I don’t get it as you’ll need a search engine at some point to investigate into logs.

Maxime

There are a couple of reasons when we are piping logs to Kafka.

1) We have quite a large number of logs. We currently have an ELK stack dedicated to a single application in one scenario because of the sheer number of logs events (traffic) is pushes.

2) We also have significant number of pre-existing Search platforms through the organization. We didn’t want to be in the business of managing log search. We just want to provide an easy, single point by which various teams could consume the logs based on their need, to include dev, qa, security, business continuity, data analytics etc etc.

By doing this, we have also provided a fairly granular way of providing this service. Example: A security team decides they want to collect all apache/nginx access logs without any application level logs. With our method this is trivial. We have fluentd pipe all access logs from every application to a single kafka topic and the security team consumes them. No filtering, no search indexes just for access logs and a relatively small number of records to consume

hi Michael,

First of all thank you for the post! I’ve tried to use this Docker image with the configuration which you described, but when the pod starts, I get the following message:

…

2016-06-15 11:39:30 +0000 [info]: initialized producer kafka

2016-06-15 11:39:30 +0000 [info]: following tail of …

2016-06-15 11:39:31 +0000 [warn]: failed to expand `%Q[#{kubernetes[“namespace_name”]}]` error_class=NameError error=”undefined local variable or method `kubernetes'”

Do you know what is the issue?

ensure the data from the kubernetes_metatdata is getting added to the log event.

type kubernetes_metadata

I would modify the td-agent.conf to log locally instead of kafka then check the log files to ensure the kubernetes_metadata is getting inserted into the log events.

Mike … sweet. We will be using this 🙂 We need to get all the logs from the servers as well. Now where the hell do I put all this stuf 🙂

Thanks Chris. Glad you like it. Let us know the progress you make. Would love to collaborate at some point.

I couldnt find the dockerfile for the ywchenbu/fluentd:0.8. Can it be made available?

Manish,

I would recommend if you have the time, convert this to Alpine. This is our intent but we have other priorities at the moment. If you do, please please please let us know. We would definitely take advantage of it.

With that said, here is the dockerfile.

FROM ubuntu:14.04

MAINTAINER Yiwei Chen

# td-agent version and docker image descrition

LABEL version="0.12.28" description="td-agent-kafka"

# Ensure there are enough file descriptors for running Fluentd

# need to be latger

RUN ulimit -n 65536

# Disable prompts from apt.

ENV DEBIAN_FRONTEND noninteractive

# Install prerequisites.

RUN apt-get update && \

apt-get install -y -q curl make g++ git && \

apt-get clean && \

rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

# Install Fluentd

RUN /usr/bin/curl -L https://td-toolbelt.herokuapp.com/sh/install-ubuntu-trusty-td-agent2.sh | sh

RUN td-agent-gem install --no-document fluentd -v 0.12.28

# Change the default user and group to root.

# Needed to allow access to /var/log/docker/... files.

RUN sed -i -e "s/USER=td-agent/USER=root/" -e "s/GROUP=td-agent/GROUP=root/" /etc/init.d/td-agent

# Install Kafka plugin

RUN td-agent-gem install fluent-plugin-kafka

# Install others plug-in.

RUN td-agent-gem install fluent-plugin-kubernetes_metadata_filter fluent-plugin-kubernetes fluent-plugin-kinesis

# Copy the Fluentd configuration file.

COPY td-agent.conf /etc/td-agent/td-agent.conf

# Run the Fluentd service.

ENTRYPOINT ["td-agent"]