Why an API gateway for micro services?

API Gateways can be an important part of the Micro Services / Serverless Architecture.

API gateways can assist with:

- managing multiple protocols

- working with an aggregator for web components across multiple backend micro services (backend for front-end)

- reducing the number of round trip requests

- managing auth between micro services

- TLS termination

- Rate Limiting

- Request Transformation

- IP blocking

- ID Correlation

- and many more…

Kong Open Source API Gateway has been integrated into several areas of our platform.

Benefits:

- lightweight

- scales horizontally

- database backend only for config (unless basic auth is used)

- propagations happen quickly

  - when a new configuration is pushed to the database, the other scaled Kong containers get updated quickly

- full service API for configuration

  - the API is far more robust than the UI

- supports some newer protocols like HTTP/2

  - https://github.com/Mashape/kong/pull/2541

- Kong continues to work even when the database backend goes away

- Quite a few Authentication plugins are available for Kong

- ACLs can be utilized to assist with Authorization
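
To make the ACL point a bit more concrete, here is a minimal Python sketch of the kind of group-based check an ACL performs once a consumer has been authenticated. It is a conceptual model only, not Kong's implementation; the function name `is_allowed` and the group names are made up for illustration.

```python
from typing import Iterable, Optional


def is_allowed(consumer_groups: Iterable[str],
               whitelist: Optional[Iterable[str]] = None,
               blacklist: Optional[Iterable[str]] = None) -> bool:
    """Decide whether an already-authenticated consumer may call an API.

    Hypothetical helper: with a whitelist, the consumer needs at least one
    listed group; with a blacklist, the consumer must have none of them.
    """
    groups = set(consumer_groups)
    if whitelist is not None:
        return bool(groups & set(whitelist))
    if blacklist is not None:
        return not (groups & set(blacklist))
    # No ACL configured: authentication alone decides.
    return True


if __name__ == "__main__":
    print(is_allowed(["admins"], whitelist=["admins", "ops"]))
    print(is_allowed(["guests"], whitelist=["admins"]))
```

Authentication answers "who is calling?", while a check like this answers "is that caller allowed here?", which is why the two kinds of plugin are usually combined.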

Limitations for us (not necessarily you):

- Kong doesn’t have enough capabilities around metrics collection and audit compliance

- It's written in Lua (we have one guy with extensive skills in this area)

- We had to write several plugins to add functionality our requirements called for

We run Kong as containers that are quite small in resource consumption. As an example, the gateway for our documentation site consumes minimal CPU and 50MB of RAM and if I’m honest we could probably reduce it.

We expect Kong to back a very large deployment for us in the near future, as one of our prime customers (an internal Pearson development team) is also adopting Kong.

Kong is capable of supporting both Postgres and Cassandra as storage backends. I’ve chosen Postgres because Cassandra seemed like overkill for our workloads but both work well.

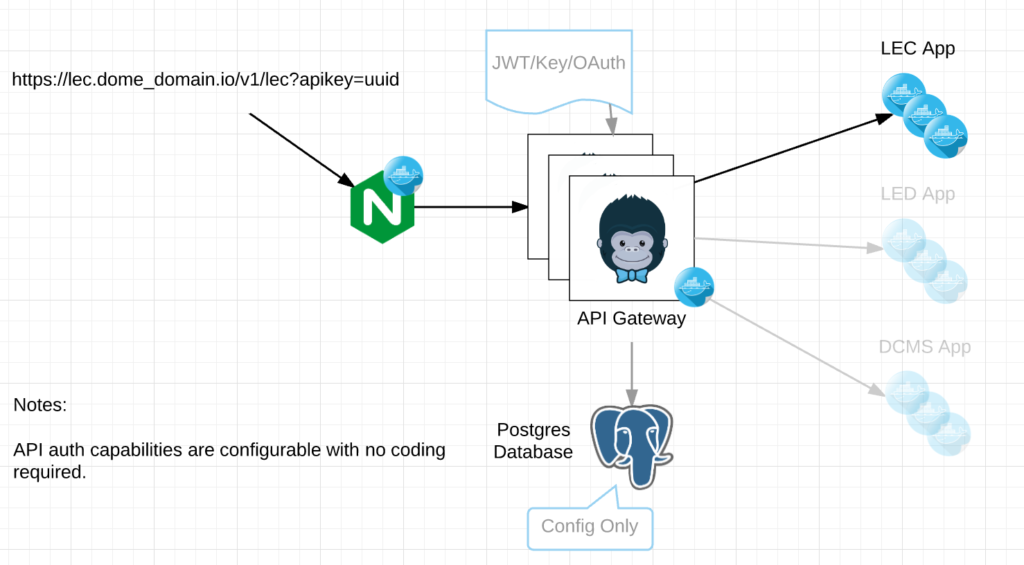

The diagram above shows an example of a workflow for a micro service using Kong.

In the diagram, the request contains the /v1/lec URI, which Kong uses to route it to the correct micro service. In line with this request, Kong can trigger an OAuth workflow or even choose not to authenticate for specific URIs like /health or /status if need be. As an example, we use one type of authentication for webhooks and another for users.
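
The routing and authentication decision described here can be sketched in a few lines of Python. This is only an illustration of the idea, not how Kong implements it: the upstream URL, the `decide` function, and the `"hmac"` / `"oauth2"` labels are assumptions chosen for the example (the post does not say which scheme is used for webhooks).

```python
from typing import NamedTuple, Optional

# Hypothetical routing table: URI prefix -> upstream micro service.
ROUTES = {
    "/v1/lec": "http://lec.default.svc.cluster.local",
}

# Paths that skip authentication entirely, as with /health and /status.
OPEN_SUFFIXES = ("/health", "/status")


class Decision(NamedTuple):
    upstream: Optional[str]  # None means no route matched
    auth: Optional[str]      # None means no authentication required


def decide(path: str, caller: str = "user") -> Decision:
    """Pick an upstream by longest matching prefix, then choose an auth scheme."""
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        return Decision(None, None)
    prefix = max(matches, key=len)
    if path.endswith(OPEN_SUFFIXES):
        return Decision(ROUTES[prefix], None)
    # Placeholder scheme names: one for webhooks, another for users.
    auth = "hmac" if caller == "webhook" else "oauth2"
    return Decision(ROUTES[prefix], auth)


if __name__ == "__main__":
    print(decide("/v1/lec/courses"))
    print(decide("/v1/lec/health"))
```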

In the very near future we will be open sourcing a few plugins for Kong for things we felt were missing.

- rewrite rules plugin

- https redirect plugin (I think someone else also has one)

- dynamic access control plugin

All of these were written by Jeremy Darling on our team.

We deploy Kong alongside the rest of our container applications and scale it like any other micro service. Configuration is completed via curl commands to the Admin API.

The Admin API responds with both an HTTP status code (for example, 200 OK) and, if it's a POST request, a JSON object containing the result of the call.
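
For anyone who prefers a script over raw curl, here is a hedged Python sketch of the same kind of call using only the standard library. The field names (`name`, `uris`, `upstream_url`) and the `/apis` path reflect my understanding of the Admin API from the Kong releases of this era; newer releases use `/services` and `/routes` instead, so check the docs for your version. `ADMIN_URL` and both function names are assumptions for the example.

```python
import json
import urllib.request

# Assumption: the Admin API is reachable locally on its usual port 8001.
ADMIN_URL = "http://localhost:8001"


def build_add_api_request(name: str, uri: str, upstream_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST that registers an API with Kong."""
    payload = {"name": name, "uris": [uri], "upstream_url": upstream_url}
    return urllib.request.Request(
        url=f"{ADMIN_URL}/apis",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> tuple:
    """Send the request and return (status code, decoded JSON body).

    urllib raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    with urllib.request.urlopen(request) as response:
        return response.status, json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_add_api_request("lec", "/v1/lec", "http://lec.default.svc.cluster.local")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Keeping request building separate from sending makes it easy to log or review exactly what will be pushed to the gateway before anything changes.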

Automation has been put in place to enable us to configure Kong and completely rebuild the API Gateway layer in the event of a DR scenario. Consider this as our “the database went down, backups are corrupt, what do we do now?” scenario.
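
The automation itself is not shown in this post, so the following is only a sketch of one way such a rebuild could work: keep the desired gateway configuration as data outside the Kong database, compare it with whatever the gateway currently reports, and derive the steps needed to converge. The `plan_rebuild` function and the shape of the config dictionaries are hypothetical.

```python
from typing import Dict, List, Tuple

# API name -> settings, e.g. {"lec": {"uris": "/v1/lec", "upstream_url": "..."}}
ApiConfig = Dict[str, Dict[str, str]]


def plan_rebuild(desired: ApiConfig, current: ApiConfig) -> List[Tuple[str, str]]:
    """Return ordered (action, api_name) steps that turn `current` into `desired`."""
    steps = []
    for name in sorted(desired):
        if name not in current:
            steps.append(("create", name))
        elif current[name] != desired[name]:
            steps.append(("update", name))
    # Anything the gateway has that we no longer want gets removed last.
    for name in sorted(current):
        if name not in desired:
            steps.append(("delete", name))
    return steps


if __name__ == "__main__":
    desired = {
        "docs": {"uris": "/docs", "upstream_url": "http://docs"},
        "lec": {"uris": "/v1/lec", "upstream_url": "http://lec"},
    }
    # An empty database after a disaster means everything is re-created.
    print(plan_rebuild(desired, {}))
```

With an empty `current`, the plan is simply "create everything", which is exactly the total-loss case; the same function also handles partial drift.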

We have even taken it so far as to wrap it into a Universal API, so Kong gateways can be configured across multiple geographically dispersed regions that serve the same micro services while keeping the Kong databases separate and local to their region.
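
As a rough illustration of that idea (not the actual Universal API), a wrapper can push one API definition to each region's own Admin API and report the outcome per region. The region names, URLs, and the `apply_everywhere` function are invented for the example, and the `send` callable is injected so the sketch does not depend on any particular HTTP client.

```python
from typing import Callable, Dict

# Hypothetical mapping of region -> that region's Admin API base URL.
REGION_ADMIN_URLS = {
    "us-east": "http://kong-admin.us-east.example.internal:8001",
    "eu-west": "http://kong-admin.eu-west.example.internal:8001",
}


def apply_everywhere(payload: dict,
                     send: Callable[[str, dict], int],
                     regions: Dict[str, str] = REGION_ADMIN_URLS) -> Dict[str, str]:
    """Send the same API definition to every region and report per-region results.

    `send(url, payload)` must return the HTTP status code of the call.
    """
    results = {}
    for region, admin_url in regions.items():
        try:
            status = send(f"{admin_url}/apis", payload)
            results[region] = "ok" if 200 <= status < 300 else f"failed ({status})"
        except OSError as exc:
            # One unreachable region should not block the others.
            results[region] = f"error ({exc})"
    return results


if __name__ == "__main__":
    fake_send = lambda url, payload: 201  # stand-in for a real HTTP call
    print(apply_everywhere({"name": "lec"}, fake_send))
```

Because each region keeps its own database, a failure in one region leaves the others untouched, and the per-region report shows where a retry is needed.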

In the end, we have chosen Kong because it has the right architecture to scale, has a good number of the capabilities one would expect in an API Gateway, and sits on Nginx, which is a well-known, stable proxy technology. It's easy to consume, it's API driven, and it's flexible yet small enough in size that we can choose different implementations depending on the requirements of the application stack.

Example Postgres Kubernetes config

    apiVersion: v1
    kind: Service
    metadata:
      name: postgres
    spec:
      ports:
        - name: pgsql
          port: 5432
          targetPort: 5432
          protocol: TCP
      selector:
        app: postgres
    ---
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: postgres
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: postgres
        spec:
          containers:
            - name: postgres
              image: postgres:9.4
              env:
                - name: POSTGRES_USER
                  value: kong
                - name: POSTGRES_PASSWORD
                  value: kong
                - name: POSTGRES_DB
                  value: kong
                - name: PGDATA
                  value: /var/lib/postgresql/data/pgdata
              ports:
                - containerPort: 5432
              volumeMounts:
                - mountPath: /var/lib/postgresql/data
                  name: pg-data
          volumes:
            - name: pg-data
              emptyDir: {}

Kubernetes Kong config for Postgres

    apiVersion: v1
    kind: Service
    metadata:
      name: kong-proxy
    spec:
      ports:
        - name: kong-proxy
          port: 8000
          targetPort: 8000
          protocol: TCP
        - name: kong-proxy-ssl
          port: 8443
          targetPort: 8443
          protocol: TCP
      selector:
        app: kong
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kong-deployment
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            name: kong-deployment
            app: kong
        spec:
          containers:
            - name: kong
              image: kong
              env:
                - name: KONG_PG_PASSWORD
                  value: kong
                - name: KONG_PG_HOST
                  value: postgres.default.svc.cluster.local
                - name: KONG_HOST_IP
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: status.podIP
              command: [ "/bin/sh", "-c", "KONG_CLUSTER_ADVERTISE=$(KONG_HOST_IP):7946 KONG_NGINX_DAEMON='off' kong start" ]
              ports:
                - name: admin
                  containerPort: 8001
                  protocol: TCP
                - name: proxy
                  containerPort: 8000
                  protocol: TCP
                - name: proxy-ssl
                  containerPort: 8443
                  protocol: TCP
                - name: surf-tcp
                  containerPort: 7946
                  protocol: TCP
                - name: surf-udp
                  containerPort: 7946
                  protocol: UDP

If you look at the configs above, you’ll notice they do not expose Kong externally. This is because we use Ingress Controllers, so here is an Ingress example.

Ingress Config example:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      labels:
        name: kong
      name: kong
      namespace: my-namespace  # placeholder: replace with your namespace
    spec:
      rules:
        - host: some_domain_reference  # placeholder: replace with your domain
          http:
            paths:
              - backend:
                  serviceName: kong-proxy  # must match the name of the Kong proxy Service
                  servicePort: 8000
                path: /
    status:
      loadBalancer: {}

Docker container – Kong

https://hub.docker.com/_/kong/

Kubernetes Deployment for Kong

https://github.com/Mashape/kong-dist-kubernetes

@devoperandi